Research Themes

Research on Understanding Emotional Expression and Recognition Mechanisms to Develop AI for Enhancing Human Well-being

We are conducting research to understand the mechanisms of human emotional expression and recognition from social functional and informatics perspectives, aiming to develop AI that enhances people’s well-being.

Why Did Emotions Evolve? — A Theory of Mind for Overcoming Social Dilemmas

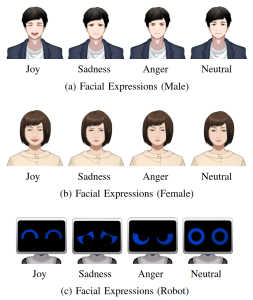

Emotions not only express internal values but also function to influence others. Smiles deepen relationships, anger elicits submission, and regret repairs relationships. Through collaborative research with the US Army Research Laboratory, the University of Southern California, and the University of Lisbon, we have investigated these “social functions of emotions” through multiple approaches: human interaction experiments with virtual agents and avatars, evolutionary simulations, and computational cognitive models using Bayesian Theory of Mind. Specifically, using the “repeated prisoner’s dilemma” game, in which participants make simultaneous decisions with the same partner multiple times, we examined how people change their decisions based not only on whether their partner is cooperative or competitive, but also on the emotions their partner expresses. Results showed that humans infer intentions from the emotions expressed by others, becoming more cooperative toward those who express cooperative emotions while withholding cooperation from those who show competitive attitudes and emotions (de Melo & Terada, 2019, 2020; de Melo, Terada, & Santos, 2021). Furthermore, using evolutionary simulations, we demonstrated that such emotional expressions play an important role in the evolution of indirect reciprocity through reputation, and that emotional social norms function as mechanisms that promote the evolution of cooperation even in error-prone situations (da Fonseca et al., 2025). We also confirmed that computational cognitive models theoretically derived from interdependence theory and emotion appraisal theory can quantitatively explain how humans interpret others’ emotions and adjust their own behavior (Terada et al., 2025).

- de Melo, C. M. & Terada, K. (2019). Cooperation with autonomous machines through culture and emotion. PLOS ONE, 14(11), 1–12. https://doi.org/10.1371/journal.pone.0224758

- de Melo, C. M. & Terada, K. (2020). The interplay of emotion expressions and strategy in promoting cooperation in the iterated prisoner’s dilemma. Scientific Reports, 10, 1–8. https://doi.org/10.1038/s41598-020-71919-6

- de Melo, C. M., Terada, K. & Santos, F. C. (2021). Emotion expressions shape human social norms and reputations. iScience, 24(3), 1–9. https://doi.org/10.1016/j.isci.2021.102141

- da Fonseca, H. C., de Melo, C. M., Terada, K., Gratch, J., Paiva, A. S. & Santos, F. C. (2025). Evolution of Indirect Reciprocity under Emotion Expression. Scientific Reports. https://doi.org/10.1038/s41598-025-89588-8

- Terada, K., de Melo, C. M., Santos, F. C. & Gratch, J. (2025). A Bayesian Model of Mind Reading from Decisions and Emotions in Social Dilemmas. Proceedings of the 47th Annual Meeting of the Cognitive Science Society (CogSci 2025).
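
The idea of inferring a partner's intentions from the emotions they express after each round can be illustrated with a small Bayesian update. The sketch below is not the model from the cited papers: the two partner types, the outcome names, and every probability in `LIKELIHOOD` are hypothetical values chosen only to show the mechanics.

```python
# Minimal sketch of Bayesian inference about a partner's type from emotions.
# All names and numbers here are illustrative assumptions, not published values.

# Prior belief about the partner before any emotion is observed.
PRIOR = {"cooperative": 0.5, "competitive": 0.5}

# Assumed P(emotion | partner type, outcome of the round).
LIKELIHOOD = {
    "cooperative": {
        "mutual_cooperation": {"joy": 0.8, "regret": 0.2},
        "partner_exploits_me": {"joy": 0.2, "regret": 0.8},
    },
    "competitive": {
        "mutual_cooperation": {"joy": 0.2, "regret": 0.8},
        "partner_exploits_me": {"joy": 0.8, "regret": 0.2},
    },
}


def update(belief, outcome, emotion):
    """Return the posterior over partner types after one observed emotion."""
    unnormalized = {
        partner_type: belief[partner_type] * LIKELIHOOD[partner_type][outcome][emotion]
        for partner_type in belief
    }
    total = sum(unnormalized.values())
    return {partner_type: value / total for partner_type, value in unnormalized.items()}


# Beliefs can be updated round by round as new expressions are observed.
belief = update(PRIOR, "mutual_cooperation", "joy")
belief = update(belief, "partner_exploits_me", "regret")
print(belief)
```

Under these assumed likelihoods, joy after mutual cooperation and regret after exploitation both shift belief toward the cooperative type, which is the direction of inference described above.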

Adaptive Regulation of Human-AI Cooperation through Emotional Expression

This research demonstrated that emotional expressions by AI agents are crucial mechanisms for appropriately regulating cooperation between humans and AI agents. Using the prisoner’s dilemma game, experiments revealed that AI agents’ emotional expression patterns can intentionally modulate human cooperative behavior. In particular, we showed that when agents display self-sacrificial emotion expressions (sadness when cooperating, joy when exploited), people can pursue their self-interest without feeling guilty. This occurs through a mechanism called Reverse Appraisal, where people infer the mental states and preferences of others from their emotional expressions. These findings have direct applications for designing emotional interfaces that enable effective collaboration with AI while reducing productivity loss and risks that may arise when humans become excessively cooperative with AI agents in various contexts, such as chatbot interactions, disaster relief, or military operations.

- Emotional Expression Help Regulate the Appropriate level of Cooperation with Agents, Ryoya Ito, Celso M. de Melo, Jonathan Gratch and Kazunori Terada, The 12th International Conference on Affective Computing and Intelligent Interaction (ACII ’24), 2024.

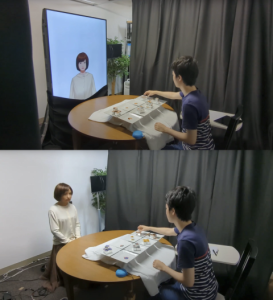

Impact of Uncanniness in Emotional Expression on Human-AI Negotiation: Comparing Virtual Agents and Androids

This paper examined the differences in effectiveness between virtual agents (virtual characters) and androids (human-like robots) in negotiation scenarios using emotional expressions. In the experiment, 82 participants engaged in non-verbal negotiation games with either virtual agents or android robots that displayed emotional expressions. Results showed that participants could more accurately infer the preferences of virtual agents compared to androids, and achieved better integrative solutions (mutually beneficial deals). This effect was explained by the “uncanny valley” phenomenon, where participants felt uncanniness toward androids displaying emotional expressions (especially joy), which hindered effective negotiation. This research suggests that in designing decision support systems that utilize emotional expressions, the naturalness and interpretability of emotional expressions are more important than merely having a physical embodiment.

- People Negotiate Better with Emotional Human-Like Virtual Agents than Android Robots, Motoaki Sato, Takahisa Uchida, Yuichiro Yoshikawa, Celso M. de Melo, Jonathan Gratch and Kazunori Terada, The 12th International Conference on Affective Computing and Intelligent Interaction (ACII ’24), 2024.

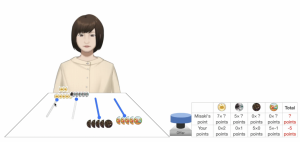

AI for Effective Negotiation: The Impact of Local Query Strategies for Reading Preferences from Emotional Expressions

This research examined the effectiveness of a preference exploration strategy called “local query” in human-AI negotiations. Local query is a method for understanding the negotiation partner’s preferences (what they value) by focusing on one item while keeping others fixed and observing the partner’s reactions. In this study, we exclusively used emotional reactions. In the experiment, 76 participants engaged in negotiation games with AI, comparing the outcomes of groups who had learned the local query strategy beforehand with those who had not. Results showed that participants who learned the local query strategy could more accurately understand the AI’s preferences and make proposals that were advantageous for the AI (Win-Win solutions) without sacrificing their own interests. This research provides insights into the process of understanding partners through emotional expressions in AI negotiations, contributing to the development of AI that supports better decision-making.

- Motoaki Sato, Kazunori Terada. (2025). The Effect of Local Query on Win-Win Solutions in Human-AI Negotiations. HAI Symposium 2025.
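
The local query strategy described above (change one item, hold the others fixed, observe the reaction) can be sketched as a short loop. This is an illustration under stated assumptions: the simulated partner, its hidden weights, and the numeric "joy intensity" it returns are hypothetical stand-ins for the emotional reactions used in the study.

```python
# Minimal sketch of a local query over negotiation items.
# The partner simulation and item names are hypothetical.

def make_simulated_partner(hidden_weights):
    """Build a reaction function for a partner whose preferences are hidden."""
    top = max(hidden_weights.values())

    def react(before, after):
        # Reaction strength grows with the partner's gain from the change.
        gain = sum(hidden_weights[item] * (after[item] - before[item])
                   for item in hidden_weights)
        return gain / top

    return react


def local_query(base_offer, react, step=1):
    """Probe one item at a time while keeping every other item fixed."""
    reactions = {}
    for item in base_offer:
        probe = dict(base_offer)
        probe[item] += step  # change only this item
        reactions[item] = react(base_offer, probe)
    return reactions


def rank_items(reactions):
    """Order items from the strongest to the weakest observed reaction."""
    return sorted(reactions, key=reactions.get, reverse=True)


partner = make_simulated_partner({"apples": 1, "bananas": 3, "pears": 2})
reactions = local_query({"apples": 1, "bananas": 1, "pears": 1}, partner)
print(rank_items(reactions))
```

Because only one item changes per probe, any difference in the reaction can be attributed to that item, which is what makes the estimated ranking interpretable.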

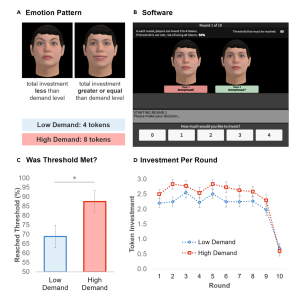

Overcoming Collective Risk through Emotional Expression: The Impact of Anger and Joy Expressions on Solving Social Dilemmas

This research investigated the effect of emotional expressions in “collective risk situations,” such as climate change measures. A collective risk dilemma is a situation where participants must sacrifice individual benefits to achieve a common goal (threshold), or everyone faces disaster risk. In the experiment, we set up scenarios where participants cooperated with either a “high-demand group” (expressing anger when investment was low) or a “low-demand group” (expressing joy when investment was high). Interestingly, participants who cooperated with groups expressing the negative emotion of “anger” invested more and achieved higher threshold success rates than those who cooperated with groups expressing the positive emotion of “joy”. These results suggest that emotional expressions can convey group demand levels and expectations, potentially promoting social cooperative behavior. This research reveals that non-verbal communication through emotional expressions plays an important role in resolving social dilemmas and provides valuable insights for developing AI that supports better decision-making.

- Emotion Expression and Cooperation under Collective Risks, Celso M. de Melo, Francisco C. Santos and Kazunori Terada, iScience, Vol. 26, No. 11, pp. 108063, 2023. https://doi.org/10.1016/j.isci.2023.108063
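
The structure of a collective risk dilemma can be made concrete with a small payoff function. This sketch follows the common textbook formulation (a shared threshold and a probability of losing what remains if it is missed); the endowment, threshold, and risk values are assumptions for illustration and are not the parameters of the cited experiment.

```python
# Minimal sketch of expected payoffs in a collective risk dilemma.
# Parameter values used below are hypothetical.

def expected_payoffs(endowment, contributions, threshold, risk):
    """Expected payoff per player after contributing to a common goal.

    If total contributions reach the threshold, each player keeps what they
    did not contribute. Otherwise each player loses the remainder with
    probability `risk`.
    """
    remaining = [endowment - c for c in contributions]
    if sum(contributions) >= threshold:
        return [float(r) for r in remaining]
    return [(1.0 - risk) * r for r in remaining]


# Everyone contributes a fair share: the threshold is met.
print(expected_payoffs(40, [20, 20, 20], threshold=60, risk=0.5))

# One player free-rides: the threshold is missed and everyone bears the risk.
print(expected_payoffs(40, [0, 20, 20], threshold=60, risk=0.5))
```

The second call shows the dilemma: the free rider keeps more than the others, but the whole group, free rider included, ends up worse off than under full cooperation.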

Research on the Ambiguity of Smiles — The Function of Emotional Expression in Social Contexts

This research focused on the diverse social functions of smiles and experimentally examined their relationship with social norms of politeness. In human communication, emotional expressions are not merely expressions of internal states but have social functions that influence others. Particularly noteworthy is that smiles take on multiple meanings in social environments where expressions of anger and sadness are suppressed and smiles are expected in public settings. In an experiment using scenes of bullying between agents, we found that anger and dominant smiles produce similar perceptions of dominance, and that sadness emphasizes submission and elicits assistance. These findings demonstrate the social functions and context-dependency of emotional expressions, deepening our understanding of human non-verbal communication and representing an important step toward developing AI capable of more natural interpersonal communication.

- The Ambiguity of Smiles – Co-evolution of Politeness Social Norms and Pragmatic Smiles, Haruma Matsuda, Kazunori Terada, Proceedings of the 38th Annual Conference of the Japanese Society for Artificial Intelligence, 2024.

Development of AI that Understands Human Preferences and Thinking to Support Better Decision-Making

We are developing AI that understands human preferences and thinking to support better decision-making.

The Effect of Personalized Persuasion on Belief Manipulation

In collaboration with Chubu Electric Power Co., Inc., we proposed a persuasion method that presents information based on detailed individual beliefs and values. Based on multi-attribute utility theory, we conducted persuasive dialogues that presented counterarguments to the features that each individual evaluated most negatively in order to change people’s attitudes toward fully autonomous vehicles. In an experiment with 197 participants, personalized persuasion improved the sense of obligation that “autonomous vehicles should be socially accepted,” but did not affect monetary value or desire to ride. These results suggest that “wanting to” desires and “should” obligations are processed by different mechanisms in the brain, and that personalized belief manipulation influences higher cognitive processes while having little effect on personal desires. The findings of this research have potential applications in attitude change not only for autonomous driving technology but also in various fields involving complex cognitive and motivational processes, such as healthcare, education, and environmental behavior.

- Persuasion by Shaping Beliefs about Multidimensional Features of a Thing, Kazunori Terada, Yasuo Noma and Masanori Hattori, Proceedings of the 23rd International Conference on Autonomous Agents and Multiagent Systems (AAMAS ’24), pp. 2510-2512, 2024. https://dl.acm.org/doi/10.5555/3635637.3663210
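
Choosing which feature to argue against can be sketched with a weighted additive utility, a common form of multi-attribute utility theory. The additive form, the feature names, and all numbers below are assumptions for illustration; the cited paper may define the evaluation differently.

```python
# Minimal sketch of selecting a persuasion target with an additive
# multi-attribute utility. Feature names and values are hypothetical.

def overall_utility(weights, evaluations):
    """Weighted sum of per-feature evaluations."""
    return sum(weights[name] * evaluations[name] for name in weights)


def most_negative_feature(weights, evaluations):
    """Feature whose weighted evaluation lowers the overall utility the most."""
    return min(weights, key=lambda name: weights[name] * evaluations[name])


# One person's importance weights and evaluations (range -1 to 1).
weights = {"safety": 0.5, "cost": 0.3, "convenience": 0.2}
evaluations = {"safety": -0.8, "cost": -0.5, "convenience": 0.6}

target = most_negative_feature(weights, evaluations)
print(target, overall_utility(weights, evaluations))
```

A personalized dialogue would then present counterarguments about `target` for this person, while a different person with different weights and evaluations would receive arguments about a different feature.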

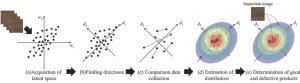

Sensory Inspection Technology for Wood Grain Images Using GAN Latent Space Exploration

In collaboration with Professor Kunihito Kato of Gifu University and Yamaha Corporation, we developed a method that uses GAN latent space exploration for sensory inspection of wood grain, which varies considerably from piece to piece in wooden products. By learning the latent feature space of wood grain images with StyleGAN2 and using GANSpace to discover directions that manipulate image elements of wood grain (stripe patterns, clarity, color tones, etc.), we were able to quantitatively estimate the discrimination boundary between good and defective products based on inspectors’ sensibilities. By having AI learn and mathematically model the sensory judgment criteria of skilled inspectors, which are difficult to verbalize, this technology is expected to contribute not only to more efficient quality control but also to skill transfer and the standardization of inspection criteria.

- Sensory Inspection of Wood Grain Images Using GAN Latent Space Exploration, Tomoya Nakamura, Kunihito Kato, Kazunori Terada, Michikazu Takahashi, Yasuo Shiozawa, Hiroaki Aizawa, Journal of the Japan Society for Precision Engineering, Vol. 89, No. 2, pp. 213-220, Japan Society for Precision Engineering, 2023. (Paper Award Winner)

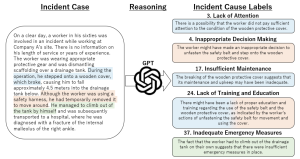

Causal Inference of Labor Accident Texts Using Large Language Models

In collaboration with Chubu Electric Power Co., Inc., we developed a multi-label annotation method that uses GPT, a large language model (LLM), for text data on labor accidents that occurred at power companies. Unstructured text within organizations has traditionally been difficult to accumulate and reuse efficiently as knowledge. In this research, we evaluated the LLM’s zero-shot and one-shot textual entailment capabilities and conducted experiments to automatically assign abstract category labels for accident causes. In particular, the one-shot approach based on prompt engineering demonstrated GPT’s strong generalization ability, achieving performance close to that of human annotators. Such methods are important for developing AI that supports human decision-making: by accurately analyzing and structuring accident causes, they can support decisions about preventive measures, safety education, compliance, and risk management. This research represents a practical application of AI technology that supports better organizational decision-making by complementing human knowledge and experience and extracting useful patterns from text data.

- Few-Shot Multi-Label Annotation of Causes for Incident Texts Using Large Language Models, Manato Nakamura, Kazunori Terada, Satoru Hayamizu, Masanori Hattori, Takafumi Fuseya and Hidetoshi Iwamatsu, Proceedings of the 28th International Conference on Knowledge-Based and Intelligent Information & Engineering Systems (KES ’24), 2024. https://doi.org/10.1016/j.procs.2024.09.501
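
Framing multi-label annotation as textual entailment can be sketched as one yes/no question per candidate label, with an optional worked example for the one-shot case. The prompt wording, the label names, and the `ask` callback below are hypothetical; they are not the prompts used in the paper, and `ask` stands in for whatever function sends a prompt to a language model and returns its reply.

```python
# Minimal sketch of zero-shot and one-shot entailment prompts for
# multi-label annotation. Wording and labels are illustrative assumptions.

def build_entailment_prompt(report, label, example=None):
    """Build a prompt asking whether `report` entails the cause `label`.

    `example` is an optional (report, label, answer) triple; supplying it
    turns the zero-shot prompt into a one-shot prompt.
    """
    lines = ["Decide whether the premise entails the hypothesis. Answer 'yes' or 'no'."]
    if example is not None:
        ex_report, ex_label, ex_answer = example
        lines += [
            f"Premise: {ex_report}",
            f"Hypothesis: The accident was caused by {ex_label}.",
            f"Answer: {ex_answer}",
        ]
    lines += [
        f"Premise: {report}",
        f"Hypothesis: The accident was caused by {label}.",
        "Answer:",
    ]
    return "\n".join(lines)


def annotate(report, labels, ask, example=None):
    """Return every label for which the model's reply starts with 'yes'."""
    assigned = []
    for label in labels:
        reply = ask(build_entailment_prompt(report, label, example))
        if reply.strip().lower().startswith("yes"):
            assigned.append(label)
    return assigned
```

Because each label is judged independently, a single report can receive zero, one, or several labels, which is what makes the scheme multi-label.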

Development of AI to Support Social Communication for People with Autism Spectrum Disorder

We are developing AI to support social communication for people with autism spectrum disorder.

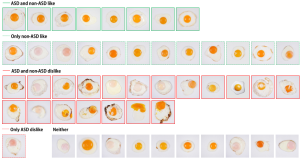

Visual Aspects Related to Picky Eating in Autism Spectrum Disorder

In this research, in collaboration with Professor Hirokazu Kumazaki (Nagasaki University), Professor Kazuhiro Ueda (University of Tokyo), Professor Masashi Komori (Osaka Electro-Communication University), and Professor Kunihito Kato (Gifu University), we investigated whether adults with Autism Spectrum Disorder (ASD) have specific preferences for the appearance of food. Specifically, 50 images of fried eggs were used to evaluate which images adults with and without ASD felt they “wanted to eat.” The results showed that adults with ASD had a narrower range of images they felt they “wanted to eat” compared to non-ASD adults, and tended to prefer fried eggs with simple and regular appearances. This suggests that the tendency to prefer visual simplicity may be related to the picky eating and food selectivity exhibited by people with ASD.

- Terada, K., Kumazaki, H., Ueda, K., Nishikawa, N., Yoshida, H., Taki, Y., Fujii, S., Komori, M. & Kato, K. (2024, May 16). Autistic Adults Have Selective Visual Appetite for Fried Eggs. The International Society for Autism Research Annual Meeting 2024.

Predicting Autism Using a Simple Motor Test

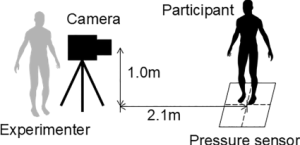

In this research, in collaboration with Professor Yoshimasa Ohmoto (Shizuoka University), Professor Hirokazu Kumazaki (Nagasaki University), and others, we investigated whether artificial intelligence (machine learning) could analyze body movements during a one-legged standing test to simply and accurately predict children with strong Autism Spectrum Disorder (ASD) traits. By collecting data on body sway and shoulder and hip movements when children stood on one leg and training machine learning models with this data, we demonstrated that children with high ASD traits could be distinguished with high accuracy. Shoulder and hip movements were found to be particularly important indicators, suggesting that the one-legged standing test, which can be performed easily in a short time, may be effective for early detection and screening of autism.

- Ohmoto, Y., Terada, K., Shimizu, H., Kawahara, H., Iwanaga, R. & Kumazaki, H. (2024). Machine learning’s effectiveness in evaluating movement in one-legged standing test for predicting high autistic trait. Frontiers in Psychiatry, 15. https://doi.org/10.3389/fpsyt.2024.1464285

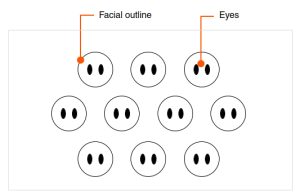

Virtual Audience with Simple “Eyes” Reduces Stress for People with Autism

In this research, in collaboration with Professor Hirokazu Kumazaki (Nagasaki University) and others, we investigated the effectiveness of training with a simple virtual audience to reduce the “anxiety of speaking in public” experienced by people with Autism Spectrum Disorder (ASD). This virtual audience was designed with a simple facial design, particularly created to direct attention to the “eyes.” When people with ASD practiced speaking in front of this virtual audience, their confidence in public speaking improved, and their physiological stress (cortisol levels in saliva) decreased significantly. These results suggest that a virtual environment with a simple design that focuses attention on the eyes may be effective in reducing anxiety and improving confidence when people with ASD speak in public.

- Kumazaki, H., Muramatsu, T., Kobayashi, K., Watanabe, T., Terada, K., Higashida, H., Yuhi, T., Mimura, M. & Kikuchi, M. (2020). Feasibility of autism-focused public speech training using a simple virtual audience for autism spectrum disorder. Psychiatry and Clinical Neurosciences, 74(2), 124–131. https://doi.org/10.1111/pcn.12949

Intentional Stance or Design Stance: Do Humans Perceive “Intention” in Robots?

Robots are essentially just machines. However, humans can sometimes perceive a mind or intention in what is otherwise an inanimate mechanism. In these studies, we explore how human cognitive strategies alternate between two perspectives known as the “intentional stance” and the “design stance.” Specifically, we experimentally examine whether people treat the behaviors of robots or artificial agents as if they arise from intentional entities, or whether they understand them as mere mechanical algorithms, with the aim of gaining insights for more natural human–robot interactions.

Do Reactive Robot Movements Make Humans Attribute Intention?

This study investigates how humans interpret a non-humanoid robot’s movements as indicative of some “intention” on the robot’s part. Concretely, experiments were carried out using chair-shaped and cube-shaped robots, comparing conditions where the robots moved responsively in sync with a person’s actions against conditions where the robots moved periodically in a purely mechanical fashion. The results showed that when a robot’s movements seemed to follow the person’s behavior, people were more inclined to see them not as simple physical phenomena but as “watching and responding,” or “acting with a goal or purpose.” Furthermore, whether the robot’s appearance was functional (like a chair) or more abstract (like a cube) influenced how strongly participants attributed intention or a goal to it, offering valuable insights for designing robots that naturally interact with humans.

- Terada, K., Shamoto, T., Mei, H. & Ito, A. (2007). Reactive Movements of Non-humanoid Robots Cause Intention Attribution in Humans. IEEE/RSJ International Conference on Intelligent Robots and Systems, 2007 (IROS 2007), 3715–3720. https://doi.org/10.1109/IROS.2007.4399429

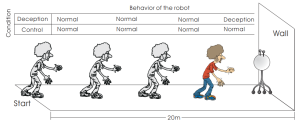

Can Robots Deceive Humans?

In this study, we examined whether people might feel “deceived” (i.e., attribute deceptive intentions) when a robot unexpectedly defies their predictions. Concretely, the rules of a Japanese children’s game similar to “Red Light, Green Light” were introduced, and the robot was deliberately programmed to look back at times or speeds that differed from human expectations. The results showed that when a robot that normally moves at a consistent pace suddenly turns quickly to “catch” a person’s movement, participants often felt “outsmarted” or “deceived.” This suggests that even in a mechanical device, people may detect or project strategies and intentions under certain circumstances.

- Terada, K. & Ito, A. (2010). Can a Robot Deceive Humans? Proceedings of the 5th ACM/IEEE International Conference on Human Robot Interaction (HRI 2010), 191–192. https://doi.org/10.1145/1734454.1734538

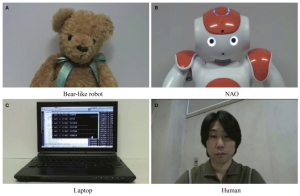

Human-Like or Mechanical? Reading Intentions vs. Reading Behaviors in a Competitive Game

In this study, participants played competitive games against agents that had either a human-like appearance and mannerisms or a more mechanical look, to see how they understood and predicted the agents’ behavior and which strategies they employed. Specifically, multiple rounds of a zero-sum game (a penny-matching game) were conducted, in which the opponent sometimes shifted from predictable behaviors to sudden “deceptive” tactics. The question was whether participants would treat the opponent as an intentional entity or merely as a set of algorithmic responses. The findings indicate that humans attribute mental states more readily to agents with a human-like appearance and therefore adopt less predictable mixed strategies, whereas with more mechanical-looking agents they recognize exploitable patterns (predictable sequences) and employ strategies that take advantage of that predictability. This highlights how people switch between an intentional stance and a design stance based on the agent’s appearance and observed behavior.

- Terada, K. & Yamada, S. (2017). Mind-Reading and Behavior-Reading against Agents with and without Anthropomorphic Features in a Competitive Situation. Frontiers in Psychology, 8, 1–10. https://doi.org/10.3389/fpsyg.2017.01071
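
The contrast between exploiting a predictable opponent and playing an unpredictable mixed strategy can be sketched for the penny-matching game. This is an illustration only: the first-order transition predictor and the function names are assumptions, not the analysis used in the paper.

```python
# Minimal sketch of behavior-reading in a penny-matching game.
# Moves are "H" (heads) or "T" (tails); the predictor is a hypothetical choice.
import random
from collections import Counter, defaultdict


def predict_next(history):
    """Predict the opponent's next move from what usually followed their last move."""
    if len(history) < 2:
        return None
    transitions = defaultdict(Counter)
    for previous, following in zip(history, history[1:]):
        transitions[previous][following] += 1
    counts = transitions[history[-1]]
    if not counts:
        return None  # the last move has never been followed by anything yet
    return counts.most_common(1)[0][0]


def choose_move(history, rng=random):
    """As the matching player, copy the prediction or fall back to a coin flip."""
    guess = predict_next(history)
    if guess is not None:
        return guess  # exploit the detected pattern
    return rng.choice("HT")  # 50/50 mixed strategy when nothing is predictable


# An opponent who strictly alternates is easy to exploit.
print(choose_move("HTHTHT"))
```

Against an opponent who alternates strictly, the predictor locks onto the pattern after a few rounds, whereas against an opponent it cannot read, the 50/50 fallback is the strategy that cannot itself be exploited.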